
When that question was posed several years ago, some of you may have been in the audience at RSNA along with Neville Irani, MD, associate professor of radiology at the University of Kansas Medical Center and a member of the ACR Informatics Commission. You may even have been one of the audience members who raised their hand, but that was well before the Boeing 737 Max disaster. Dr. Irani, guest speaker at the SR-PSO Quality Forum session on Artificial Intelligence earlier this month, used that incident to illustrate why radiologists need to start thinking critically about how they will use the AI tools on the market, and how to avoid the pitfalls, so that the field can advance.

“I think we need this expertise to avoid significant disasters,” Dr. Irani said. “We’ve always looked at aviation as an industry that has a lot of high-reliability constructs, but somehow it failed when it came to some of the software installed in the 737 Max and the lack of testing. No one thought that the introduction of this software would require retraining of the pilots and more extensive testing.”

Experience with radiology AI to date shows widespread inconsistency: SR users reported a 60% inconsistency rate, and respondents to an ACR survey reported a 93% inconsistency rate. Training in the use of AI interfaces will be necessary, he said, but so will better communication among users to flag problem areas, including those that emerge after software updates. These problems need to be anticipated, and radiologists must be vigilant in recognizing when performance drops off and take steps to establish accountability: “This is where you can be led off the rails.”

Examples of Inconsistency

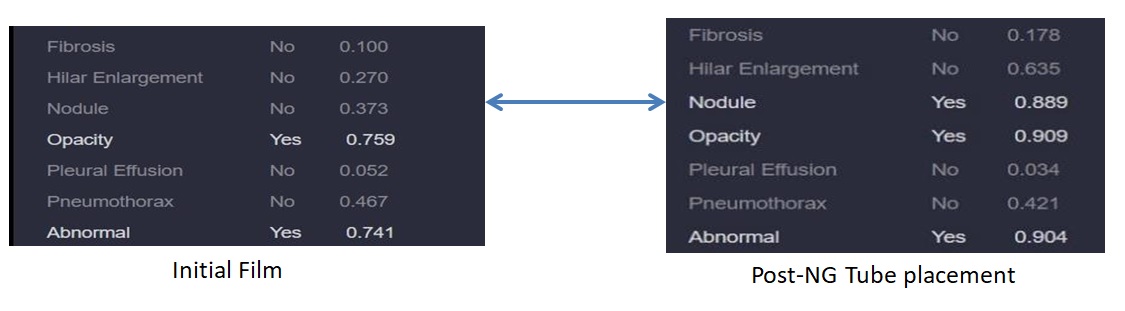

Dr. Irani reviewed several examples from the ED to illustrate his case for vigilance. In one, a supine single-view x-ray acquired in the ED was run through an AI algorithm trained to look for half a dozen findings. A second image of the same patient, acquired 8 minutes later (typical of an ED trauma workflow), was run through the same algorithm, and this time it flagged the study as containing an infection that wasn’t present on the previous exam. The module also found, but did not clearly mark, a lung nodule on the second exam that was not seen on the first and may have been a misinterpretation of an overlapping rib.

“We really have a problem when we rely on these modules because they are inconsistent, they don’t understand what the concept of a nodule is,” Dr. Irani said. “They know how pixels are arranged, and can associate a high probability that this is associated with the mention of a nodule in a radiology report. Neural networks are basically fancy association machines; they do not understand medical context or concepts.”

A different portable single-view chest x-ray of a patient with a chest tube further illustrated the problem of inconsistent results. In this case, the portable modality software produced an edge-enhanced image as part of the dataset. When both images were sent through a pneumothorax algorithm, the algorithm flagged numerous abnormalities on the first image, including a highlighted pneumothorax, none of which it flagged on the edge-enhanced image. “It’s the same exposure with the filter put over it,” he said. “There is no real understanding and hence the inconsistency.”

False positives actually slow down workflow, something that happens frequently with some of the intracranial hemorrhage algorithms, Dr. Irani said. “These kinds of errors are not unique to the medical imaging space; they have also appeared in self-driving cars when street signs have something painted on them or have been modified in some way that prevents the car from recognizing the stop sign, which is a problem,” he said.

These examples illustrate the need to:

· identify weaknesses and develop good testing standards;

· integrate the clinical and imaging context for each module;

· find intuitive ways to point out findings on these exams (as in the nodule example, by marking the finding that triggered the call so that it can be confirmed or refuted);

· test modules against artifacts and technique-related problems to confirm that these shortcomings have been overcome.

“We can safely adopt AI-assisted image analysis if we focus on having these retrospective lookbacks in each individual clinical setting, with narrow scope and dedicated oversight,” he said. This type of oversight helps keep tools that are not helpful from propagating into patient care; CMS reimbursed mammography CAD for 10 years before it was understood to be not helpful. “We want to learn from that and avoid repeating this with artificial intelligence,” he said. “It’s important to have that clinical oversight to retest and iterate with the module developers to ensure that what they say is fixed actually is fixed.”

The Breakouts

After participants retired to virtual breakout “rooms” to discuss how they were using AI, Dr. Irani shared some valuable insights with one group. For example, when AI algorithms are deployed to the cloud, software updates can cause unanticipated changes in performance. “When we talk about upgrades to AI, there is no such thing; you are just changing the weighting in the networks, and it will produce different results,” he emphasized. “But there is always a trade-off. It may be one that is inconsequential and not a big risk [worklist and bone-age algorithms, for instance], but certain things, such as large-vessel occlusion detection or intracranial hemorrhage, do have a big impact on resource allocation as well as what gets moved to the top of the list.”

“We really need to think through how we are going to figure out when these breaks occur, and have an expectation that all colleagues will report, ‘The module doesn’t seem to be performing the same way it did before,’” Dr. Irani suggested. “The subsequent troubleshooting is much more complicated than your routine IT solution, given how the software is running and how many dependencies are in play.”

Many groups reported experimenting with natural language processing (NLP) applications, including worklist applications as well as the SR Coverys grant project to identify incidental findings on ED studies. “The noninterpretive side of AI applications is really where people are focusing, and NLP is an area that has had a lot more research than the image interpretation side,” said Dr. Irani. “The neural networks are designed a little differently for image interpretation. They are convolutional neural networks with filters applied to the image, versus NLP scenarios where you are using recurrent neural networks.”

Dr. Irani suggested that NLP is a fertile area to explore in the Strategic Radiology setting. “People have said you need large datasets, but a lot of the consistency in performance comes from smaller datasets that are well curated, combined with multiple task-specialized networks. So trying to get everything into one network that gets it right all the time is probably not a good strategy.”

Braden Shill, integration specialist at Mountain Medical, reported that he would like to explore AI-enhanced workflows to gather relevant priors for studies that are sent to teleradiology companies, and Dr. Irani suggested that AI also could be used to comb through the findings on those priors. “In fact, there is a system that has been developed (and I think it is one of the larger groups that has done its own internal AI development) in which they are going through the EMR history and the clinical history, and scouring the collective history of all exams the patient has had, to present a short summary of who this patient is,” Dr. Irani said. “I was reviewing a case today where there was a unilateral ovary resection, and the assumption was made that both ovaries were gone. So it is important to have that history, but you would have to go back to an exam from 2004 to see it. It would take a while to do that manually, but this is where AI excels and is an opportunity to really develop value-add.”

For 2021, SR’s AI Workgroup, chaired by Wei Wei-Shen Chin, MD, president of Medical Center Radiology Group, and Dr. Rishi Seth (RADNTX), and facilitated by SR-PSO Director Lisa Mead, RN, and SR-PSO Medical Director Arl Van Moore Jr., MD, has set the following action items:

· identify technologies for development partnerships;

· develop implementation processes to improve quality and safety;

· look at how these technologies can be implemented within SR Connect to improve safety and quality; and, of course,

· share best practices to promote excellence in patient care, and develop quality measures so that we can track impact on quality and safety.

“We have a good mix of physicians, IT people, and administrators in the AI Workgroup, and anyone interested in joining us is welcome,” said Ms. Mead. “The next step is to be aware of and involved in the process and try to get as many people to participate in the conversation as we possibly can.”

Hub is the monthly newsletter published for the membership of Strategic Radiology practices. It includes coalition and practice news as well as news and commentary of interest to radiology professionals.
